SAP HANA DB Disk Persistence: Shrink HANA Data Volume

- For example, if the original database size is 1 TB, it grows to about 1.5 TB during the conversion process and only afterwards shrinks to about 600-650 GB. Most organizations do not take this into account and buy a HANA box that is much too small.

- The compressed table size is the size the table occupies in the main memory of the SAP HANA database. Using SAP HANA Studio, you can check the compression status of a column store table and its compression factor.

This blog is about how to free up unused space inside the disk persistence of an SAP HANA database.

I would like to share my experience of shrinking the SAP HANA data volume, which frees up unused space inside the SAP HANA database disk persistence, reduces fragmentation at disk level and reclaims disk space.

The recommended size of the data volume for a given SAP HANA system is equal to the anticipated net disk space for data, according to the definition above, plus an additional 20% of free space.

The defragmentation happens online without blocking accesses to tables.

Prerequisites:

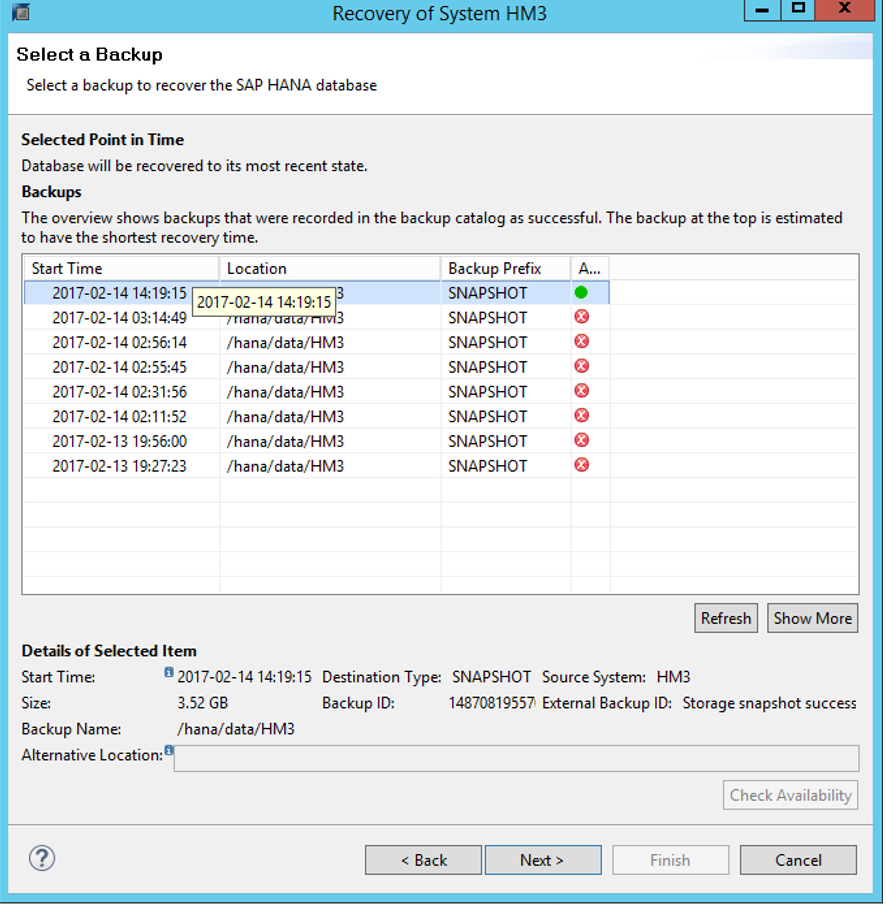

->Make sure we have a recent successful backup of the SAP system.

->Before executing the defragmentation, we can check the unused size of the data files with the SQL statement HANA_Disks_Overview. You can get the latest SQL statements from SAP Note 1969700 – SQL Statement Collection for SAP HANA.

Syntax:

All this ALTER statement does is free unused space inside an SAP HANA database disk persistence.

ALTER SYSTEM RECLAIM DATAVOLUME [SPACE] [<host_port>] <percentage_of_payload_size> DEFRAGMENT

<host_port> specifies the server on which the size of the persistence should be reduced.

<host_port> ::= '<host>:<port>'

> If <host_port> is omitted, then the statement is distributed to all servers with the persistence.

<<< OR >>>

The following statement defragments the persistence of all servers in the landscape and reduces them to 120% of the payload size.

ALTER SYSTEM RECLAIM DATAVOLUME 120 DEFRAGMENT

These instructions are as per the SAP HANA SQL and System Views Reference. This statement reduces the data volume size to a percentage of the payload size. It works in a similar way to defragmenting a hard drive: pages that are scattered around a data volume are moved to the front of the volume, and the free space at the end of the data volume is truncated.

Before the shrink:

In my case the percentage of unused space was high, hence I decided to shrink the volume by executing the command below, which is specific to one server.

ALTER SYSTEM RECLAIM DATAVOLUME '<host>:<port>' 120 DEFRAGMENT

This statement reduces data volume size to a percentage of payload size. This statement works in a similar way to defragmenting a hard drive. Pages that are scattered around a data volume are moved to the front of the volume, and the free space at the end of the data volume is truncated.

The '120' indicates the fragmentation overhead that can remain: in this case, 20 % of fragmentation on top of the existing 100 % of data is acceptable. 120 is a reasonable value because, due to temporary space requirements for table optimizations and garbage collection, it is quite normal that 20 % of space is allocated and deallocated. Smaller values can significantly increase the defragmentation runtime and provide only limited benefit.
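To make the meaning of the percentage concrete, here is a minimal Python sketch of the arithmetic only. The function name and the sample payload of 1000 GB are illustrative assumptions, not values from the system described in this blog.

```python
def target_volume_size_gb(payload_gb: float, percentage: int = 120) -> float:
    """Approximate size the data volume is reduced to.

    payload_gb: space actually used by data (the "100 %").
    percentage: value passed to RECLAIM DATAVOLUME, e.g. 120 means
                payload plus 20 % of allowed overhead.
    """
    return payload_gb * percentage / 100


# Hypothetical example: 1000 GB of payload, shrink to 120 %
print(target_volume_size_gb(1000))  # 1200.0
```

With a value of 120, a data volume holding 1000 GB of payload would therefore be truncated to roughly 1200 GB.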

Result After the shrink:

Before the shrink, UNUSED_SIZE was 453.67 GB; after the shrink it is 125.77 GB, so about 328 GB was reclaimed.
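The reclaimed amount is simply the difference between the two UNUSED_SIZE readings. A small sketch of that calculation, using the figures quoted above (the function name is an assumption for illustration):

```python
def reclaimed_gb(unused_before_gb: float, unused_after_gb: float) -> float:
    """Space given back to the file system, rounded to two decimals."""
    return round(unused_before_gb - unused_after_gb, 2)


# Figures from the before/after comparison in this blog
print(reclaimed_gb(453.67, 125.77))  # 327.9
```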

Troubleshooting:

->The progress of the defragmentation can be monitored via the SQL statement HANA_Disks_Data_SuperBlockStatistics (SAP Note 1969700).

->The SAP HANA database trace (SAP Note 2380176) contains related messages of the following form:

Shrink DataVolume to % of payload size

Reclaim[0, '.dat']:: sizes= (used= mb / max=mb | file= mb), curOverhead= %, maxOverhead= %, moving up to mb pages per cycle

DVolPart::truncate(payload= , maxSuperblockIndex= size= --> )

Reclaim[0, '.dat']:: #: truncated mb, sizes= (used= mb / max=mb | file= mb), curOverhead= %, maxOverhead= %, s)

Reclaim[0, '.dat']: steps>: moved pages (mb) in interval [0mb, 0mb[, sizes= (used= mb / max=mb | file= mb), curOverhead= %, maxOverhead= %, mb truncated (s)

->The RECLAIM task can be resumed: if it is terminated (e.g. due to 'general error: Shrink canceled, probably because of snapshot pages', SAP Note 1999880), it will continue next time at roughly the place where it stopped.

When RECLAIM runs in parallel to production load and modifications, there is a certain risk of significant runtime overhead, depending on the SAP HANA revision level:

- SAP HANA 1.0: Rather high risk of runtime overhead

- SAP HANA 2.0 <= SPS 03: Reduced risk of runtime overhead

- SAP HANA 2.0 >= SPS 04: Further optimizations to reduce risk of runtime overhead

->We can also use the following SQL statement to find out which column store tables occupy the most space inside the volumes:

SELECT TOP 30 table_name, SUM(physical_size) FROM M_TABLE_VIRTUAL_FILES
GROUP BY table_name
ORDER BY 2 DESC

Reference:

->Note – 2499913 – How to shrink SAP HANA Data volume size

->Details: https://help.sap.com/viewer/4fe29514fd584807ac9f2a04f6754767/2.0.01/en-US/20d1e5e57519101486c7812a5a8990e8.html

Azure NetApp Files provides native NFS shares that can be used for /hana/shared, /hana/data, and /hana/log volumes. Using ANF-based NFS shares for the /hana/data and /hana/log volumes requires the v4.1 NFS protocol. The NFS protocol v3 is not supported for /hana/data and /hana/log volumes when basing the shares on ANF.

Important

The NFS v3 protocol implemented on Azure NetApp Files is not supported to be used for /hana/data and /hana/log. The usage of the NFS 4.1 is mandatory for /hana/data and /hana/log volumes from a functional point of view. Whereas for the /hana/shared volume the NFS v3 or the NFS v4.1 protocol can be used from a functional point of view.

Important considerations

When considering Azure NetApp Files for SAP NetWeaver and SAP HANA, be aware of the following important considerations:

- The minimum capacity pool is 4 TiB

- The minimum volume size is 100 GiB

- Azure NetApp Files and all virtual machines, where Azure NetApp Files volumes are mounted, must be in the same Azure Virtual Network or in peered virtual networks in the same region

- It is important to have the virtual machines deployed in close proximity to the Azure NetApp storage for low latency.

- The selected virtual network must have a subnet, delegated to Azure NetApp Files

- Make sure the latency from the database server to the ANF volume is measured and below 1 millisecond

- The throughput of an Azure NetApp volume is a function of the volume quota and Service level, as documented in Service level for Azure NetApp Files. When sizing the HANA Azure NetApp volumes, make sure the resulting throughput meets the HANA system requirements

- Try to 'consolidate' volumes to achieve more performance in a larger volume; for example, use one volume for /sapmnt, /usr/sap/trans, … if possible

- Azure NetApp Files offers export policy: you can control the allowed clients, the access type (Read&Write, Read Only, etc.).

- Azure NetApp Files feature isn't zone aware yet. Currently Azure NetApp Files feature isn't deployed in all Availability zones in an Azure region. Be aware of the potential latency implications in some Azure regions.

- The User ID for sidadm and the Group ID for sapsys on the virtual machines must match the configuration in Azure NetApp Files.

Important

For SAP HANA workloads, low latency is critical. Work with your Microsoft representative to ensure that the virtual machines and the Azure NetApp Files volumes are deployed in close proximity.

Important

If there is a mismatch between the User ID for sidadm and the Group ID for sapsys on the virtual machine and in the Azure NetApp configuration, the permissions for files on Azure NetApp volumes mounted to the VM are displayed as nobody. Make sure to specify the correct User ID for sidadm and Group ID for sapsys when on-boarding a new system to Azure NetApp Files.

Sizing for HANA database on Azure NetApp Files

The throughput of an Azure NetApp volume is a function of the volume size and Service level, as documented in Service level for Azure NetApp Files.

It is important to understand the relationship between volume size and performance, and that there are physical limits for a LIF (Logical Interface) of the SVM (Storage Virtual Machine).

The table below demonstrates that it could make sense to create a large 'Standard' volume to store backups, and that it does not make sense to create an 'Ultra' volume larger than 12 TB, because the physical bandwidth capacity of a single LIF would be exceeded.

The maximum throughput for a LIF and a single Linux session is between 1.2 and 1.4 GB/s.

| Size | Throughput Standard | Throughput Premium | Throughput Ultra |
|---|---|---|---|
| 1 TB | 16 MB/sec | 64 MB/sec | 128 MB/sec |
| 2 TB | 32 MB/sec | 128 MB/sec | 256 MB/sec |
| 4 TB | 64 MB/sec | 256 MB/sec | 512 MB/sec |
| 10 TB | 160 MB/sec | 640 MB/sec | 1,280 MB/sec |
| 15 TB | 240 MB/sec | 960 MB/sec | 1,400 MB/sec |
| 20 TB | 320 MB/sec | 1,280 MB/sec | 1,400 MB/sec |
| 40 TB | 640 MB/sec | 1,400 MB/sec | 1,400 MB/sec |
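The figures in the table are consistent with a linear rule that is capped at the single-session limit. The following Python sketch reproduces them under two assumptions read off the table itself: a per-TB rate equal to the 1 TB row, and a cap of 1,400 MB/sec. The names are illustrative and not part of any Azure API.

```python
# Assumed per-TB rates, taken from the 1 TB row of the table
MB_PER_SEC_PER_TB = {"Standard": 16, "Premium": 64, "Ultra": 128}

# Assumed cap for one LIF and a single Linux session (upper end of 1.2-1.4 GB/s)
SINGLE_SESSION_CAP_MB_PER_SEC = 1400


def volume_throughput_mb_per_sec(size_tb: float, service_level: str) -> float:
    """Estimated throughput of one volume as seen from a single VM."""
    linear = size_tb * MB_PER_SEC_PER_TB[service_level]
    return min(linear, SINGLE_SESSION_CAP_MB_PER_SEC)


print(volume_throughput_mb_per_sec(10, "Ultra"))     # 1280
print(volume_throughput_mb_per_sec(15, "Ultra"))     # 1400 (capped)
print(volume_throughput_mb_per_sec(40, "Standard"))  # 640
```

This also shows why an 'Ultra' volume beyond roughly 11-12 TB adds capacity but no further single-session throughput.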

It is important to understand that the data is written to the same SSDs in the storage backend. The performance quota from the capacity pool was created to be able to manage the environment. The storage KPIs are equal for all HANA database sizes. In almost all cases, this assumption does not reflect reality or customer expectations. The size of a HANA system does not necessarily mean that a small system requires low storage throughput and a large system requires high storage throughput, but generally we can expect higher throughput requirements for larger HANA database instances. As a result of SAP's sizing rules for the underlying hardware, such larger HANA instances also provide more CPU resources and higher parallelism in tasks like loading data after an instance restart. As a result, the volume sizes should be adapted to customer expectations and requirements, and not be driven by pure capacity requirements alone.

As you design the infrastructure for SAP in Azure, you should be aware of some minimum storage throughput requirements by SAP (for production systems), which translate into minimum throughput characteristics of:

| Volume type and I/O type | Minimum KPI demanded by SAP | Premium service level | Ultra service level |
|---|---|---|---|
| Log Volume Write | 250 MB/sec | 4 TB | 2 TB |
| Data Volume Write | 250 MB/sec | 4 TB | 2 TB |
| Data Volume Read | 400 MB/sec | 6.3 TB | 3.2 TB |

Since all three KPIs are demanded, the /hana/data volume needs to be sized toward the larger capacity to fulfill the minimum read requirements.
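The capacities in the KPI table can be derived by dividing the required throughput by the per-TB rate of the service level and rounding up. A minimal sketch, assuming rates of 64 MB/sec per TB for Premium and 128 MB/sec per TB for Ultra (as implied by the throughput table) and rounding up to one decimal place:

```python
import math


def min_volume_size_tb(required_mb_per_sec: float, mb_per_sec_per_tb: float) -> float:
    """Smallest volume size (in TB, one decimal) that reaches the required throughput."""
    exact = required_mb_per_sec / mb_per_sec_per_tb
    return math.ceil(exact * 10) / 10  # round up to the next 0.1 TB


PREMIUM, ULTRA = 64, 128  # assumed MB/sec per TB

print(min_volume_size_tb(250, PREMIUM))  # 4.0  (log and data write)
print(min_volume_size_tb(400, PREMIUM))  # 6.3  (data read)
print(min_volume_size_tb(400, ULTRA))    # 3.2  (data read)
```

Because /hana/data must satisfy both the write and the read KPI, the larger of the two results applies to it.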

For HANA systems that do not require high bandwidth, the ANF volume sizes can be smaller. If a HANA system requires more throughput, the volume can be adapted by resizing the capacity online. No KPIs are defined for backup volumes; however, backup volume throughput is essential for a well-performing environment. Log and data volume performance must be designed to customer expectations.

Important

Independent of the capacity you deploy on a single NFS volume, the throughput is expected to plateau in the range of 1.2-1.4 GB/sec bandwidth leveraged by a consumer in a virtual machine. This has to do with the underlying architecture of the ANF offer and related Linux session limits around NFS. The performance and throughput tests documented in the article Performance benchmark test results for Azure NetApp Files were conducted against one shared NFS volume with multiple client VMs and, as a result, with multiple sessions. That scenario is different from the scenario we measure in SAP, where we measure throughput from a single VM against an NFS volume hosted on ANF.

To meet the SAP minimum throughput requirements for data and log, and according to the guidelines for /hana/shared, the recommended sizes would look like:

| Volume | Size Premium Storage tier | Size Ultra Storage tier | Supported NFS protocol |
|---|---|---|---|
| /hana/log/ | 4 TiB | 2 TiB | v4.1 |
| /hana/data | 6.3 TiB | 3.2 TiB | v4.1 |
| /hana/shared scale-up | Min(1 TB, 1 x RAM) | Min(1 TB, 1 x RAM) | v3 or v4.1 |
| /hana/shared scale-out | 1 x RAM of worker node per 4 worker nodes | 1 x RAM of worker node per 4 worker nodes | v3 or v4.1 |
| /hana/logbackup | 3 x RAM | 3 x RAM | v3 or v4.1 |
| /hana/backup | 2 x RAM | 2 x RAM | v3 or v4.1 |

For all volumes, NFS v4.1 is highly recommended.

The sizes for the backup volumes are estimations. Exact requirements need to be defined based on workload and operation processes. For backups, you could consolidate many volumes for different SAP HANA instances to one (or two) larger volumes, which could have a lower service level of ANF.
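The RAM-based rows of the table can be turned into a quick estimate. The sketch below covers only the scale-up case and the two backup volumes; the function name and the sample RAM size are assumptions for illustration, and the results are starting points, not exact requirements.

```python
def ram_based_volume_sizes_tb(ram_tb: float) -> dict:
    """Estimated sizes (in TB) for the volumes sized relative to RAM."""
    return {
        "/hana/shared (scale-up)": min(1.0, ram_tb),  # Min(1 TB, 1 x RAM)
        "/hana/logbackup": 3 * ram_tb,                # 3 x RAM
        "/hana/backup": 2 * ram_tb,                   # 2 x RAM
    }


# Hypothetical VM with 2 TB of RAM
for volume, size in ram_based_volume_sizes_tb(2.0).items():
    print(f"{volume}: {size} TB")
```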

Note

The Azure NetApp Files sizing recommendations stated in this document target the minimum requirements SAP expresses towards its infrastructure providers. In real customer deployments and workload scenarios, that may not be enough. Use these recommendations as a starting point and adapt them to the requirements of your specific workload.

Therefore, you could consider deploying similar throughput for the ANF volumes as listed for Ultra disk storage. Also consider the volume sizes listed for the different VM SKUs in the Ultra disk tables.

Tip

You can resize Azure NetApp Files volumes dynamically, without the need to unmount the volumes, stop the virtual machines or stop SAP HANA. That gives you the flexibility to meet both the expected and the unforeseen throughput demands of your application.

Documentation on how to deploy an SAP HANA scale-out configuration with standby node using NFS v4.1 volumes that are hosted in ANF is published in SAP HANA scale-out with standby node on Azure VMs with Azure NetApp Files on SUSE Linux Enterprise Server.

Availability

ANF system updates and upgrades are applied without impacting the customer environment. The defined SLA is 99.99%.

Volumes and IP addresses and capacity pools

With ANF, it is important to understand how the underlying infrastructure is built. A capacity pool is only a structure that makes it simpler to create a billing model for ANF. A capacity pool has no physical relationship to the underlying infrastructure. Creating a capacity pool only creates a shell that can be charged, nothing more. When you create a volume, the first SVM (Storage Virtual Machine) is created on a cluster of several NetApp systems. A single IP is created for this SVM to access the volume. If you create several volumes, all the volumes are distributed in this SVM over this multi-controller NetApp cluster. Even if you get only one IP, the data is distributed over several controllers. ANF has logic that automatically distributes customer workloads once the volumes and/or capacity of the configured storage reach an internal pre-defined level. You might notice such cases because a new IP address gets assigned to access the volumes.

Log volume and log backup volume

The 'log volume' (/hana/log) is used to write the online redo log. Thus, there are open files located in this volume and it makes no sense to snapshot this volume. Online redo log files are archived or backed up to the log backup volume once the online redo log file is full or a redo log backup is executed. To provide reasonable backup performance, the log backup volume requires good throughput. To optimize storage costs, it can make sense to consolidate the log backup volumes of multiple HANA instances, so that multiple HANA instances use the same volume and write their backups into different directories. With such a consolidation, you can get more throughput, since you need to make the volume a bit larger.

The same applies to the volume you use to write full HANA database backups to.

Backup

Besides streaming backups and the Azure Backup service backing up SAP HANA databases, as described in the article Backup guide for SAP HANA on Azure Virtual Machines, Azure NetApp Files opens the possibility to perform storage-based snapshot backups.

SAP HANA supports:

- Storage-based snapshot backups from SAP HANA 1.0 SPS7 on

- Storage-based snapshot backup support for Multi Database Container (MDC) HANA environments from SAP HANA 2.0 SPS4 on

Creating storage-based snapshot backups is a simple four-step procedure:

- Creating a HANA (internal) database snapshot - an activity you or tools need to perform

- SAP HANA writes data to the datafiles to create a consistent state on the storage - HANA performs this step as a result of creating a HANA snapshot

- Create a snapshot on the /hana/data volume on the storage - a step you or tools need to perform. There is no need to perform a snapshot on the /hana/log volume

- Delete the HANA (internal) database snapshot and resume normal operation - a step you or tools need to perform

Warning

Missing the last step or failing to perform it has a severe impact on SAP HANA's memory demand and can lead to a halt of SAP HANA.
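One way to make sure the last step is never skipped is to wrap the storage snapshot in a try/finally construct. The following Python sketch shows only the ordering of the four steps; the three callables are hypothetical placeholders that you would implement with your own tooling, not functions of any SAP or Azure library.

```python
from typing import Callable


def run_snapshot_backup(
    create_hana_snapshot: Callable[[], None],
    create_storage_snapshot: Callable[[], None],
    close_hana_snapshot: Callable[[], None],
) -> None:
    """Run the snapshot procedure and always close the HANA snapshot."""
    # Steps 1 and 2: creating the HANA snapshot makes HANA write a
    # consistent state to the data files.
    create_hana_snapshot()
    try:
        # Step 3: storage snapshot of /hana/data (not /hana/log).
        create_storage_snapshot()
    finally:
        # Step 4: runs even if step 3 fails, so the internal HANA
        # snapshot is not left open.
        close_hana_snapshot()


# Usage with stand-in functions that only record the call order
calls = []
run_snapshot_backup(
    lambda: calls.append("create HANA snapshot"),
    lambda: calls.append("create storage snapshot"),
    lambda: calls.append("close HANA snapshot"),
)
print(calls)
```

If the storage snapshot raises an error, the exception still propagates to the caller, but only after the HANA snapshot has been closed.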

This snapshot backup procedure can be managed in a variety of ways, using various tools. One example is the Python script 'ntaphana_azure.py', available on GitHub at https://github.com/netapp/ntaphana. This is sample code, provided 'as-is' without any maintenance or support.

Caution

A snapshot in itself is not a protected backup since it is located on the same physical storage as the volume you just took a snapshot of. It is mandatory to 'protect' at least one snapshot per day to a different location. This can be done in the same environment, in a remote Azure region or on Azure Blob storage.

For users of Commvault backup products, a second option is Commvault IntelliSnap V.11.21 and later. This or later versions of Commvault offer Azure NetApp Files Support. The article Commvault IntelliSnap 11.21 provides more information.

Back up the snapshot using Azure blob storage

Backing up to Azure Blob storage is a cost-effective and fast method to save ANF-based HANA database storage snapshot backups. To save the snapshots to Azure Blob storage, the azcopy tool is preferred. Download the latest version of this tool and install it, for example, in the bin directory where the Python script from GitHub is installed.

The most advanced feature is the SYNC option. If you use the SYNC option, azcopy keeps the source and the destination directory synchronized. The usage of the parameter --delete-destination is important. Without this parameter, azcopy is not deleting files at the destination site and the space utilization on the destination side would grow. Create a Block Blob container in your Azure storage account. Then create the SAS key for the blob container and synchronize the snapshot folder to the Azure Blob container.

For example, if a daily snapshot should be synchronized to the Azure Blob container to protect the data, and only that one snapshot should be kept, a single azcopy sync run with the --delete-destination parameter can be used.
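As an illustration, the sketch below assembles such an azcopy sync call as an argument list in Python without executing it. The snapshot directory and the container URL with its SAS token are hypothetical placeholders; replace them with your own values before handing the list to something like subprocess.run.

```python
from typing import List


def build_azcopy_sync_command(snapshot_dir: str, container_sas_url: str) -> List[str]:
    """Build the argument list for synchronizing a snapshot folder to a blob container."""
    return [
        "azcopy",
        "sync",
        snapshot_dir,                 # source: snapshot folder on the ANF volume
        container_sas_url,            # destination: blob container URL incl. SAS token
        "--recursive=true",           # include sub-directories
        "--delete-destination=true",  # remove files that no longer exist at the source
    ]


# Hypothetical placeholder values
command = build_azcopy_sync_command(
    "/hana/data/<SID>/mnt00001/.snapshot",
    "https://<account>.blob.core.windows.net/<container>?<SAS>",
)
print(" ".join(command))
```

Keeping the command as a list rather than a single string avoids shell quoting issues with the special characters in the SAS token.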

Next steps

Read the article: